Introduction

In recent years, generative AI and large language models (LLMs) have changed how data science is practised. No longer limited to structured datasets and numerical models, data scientists can now use natural language processing (NLP) tools to extract, analyse, and synthesise information from unstructured text. Two of the most widely used frameworks in this area are LangChain and Haystack. Both are open-source projects designed to facilitate the development of LLM-driven applications, but they take distinct approaches, which makes them suitable for different types of projects and users.

This blog compares LangChain and Haystack in the context of data science workflows, exploring their core features, use cases, ecosystem support, and integration flexibility. Whether you are building a retrieval-augmented generation (RAG) system or designing a custom chatbot for data querying, understanding the differences between these tools can help you choose the right one for your needs.

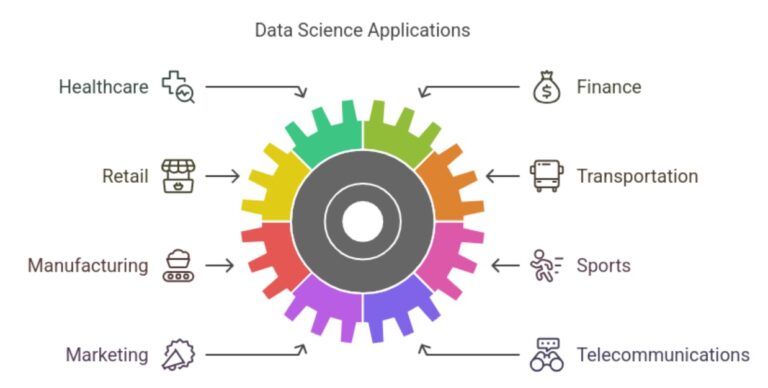

The Role of Language Model Frameworks in Data Science

Traditionally, data science revolved around numerical methods, SQL queries, and static dashboards. However, the increasing adoption of LLMs has enabled more interactive and intelligent systems. These tools can summarise reports, parse unstructured documents, answer natural language questions, and even automate repetitive analysis tasks.

Frameworks like LangChain and Haystack act as orchestration layers, helping developers and data scientists build complex LLM-powered workflows without reinventing the wheel. By handling document indexing, query routing, model inference, and integration with vector databases, these tools streamline the creation of intelligent applications.

For those enrolled in Data Scientist Classes, learning how to use such frameworks is becoming an essential skill. These tools not only demonstrate advanced NLP techniques but also provide a foundation for building real-world AI applications.

What Is LangChain?

LangChain, available as Python and JavaScript libraries, is designed for building LLM-based applications by chaining together components such as language models, retrievers, agents, and tools. It is modular and developer-friendly, and supports a wide variety of model providers, including OpenAI, Hugging Face, Cohere, and Anthropic.

One of LangChain’s key innovations is the concept of chains, where multiple LLM prompts and functions can be combined into a single workflow. For example, you can build a chain that takes user input, searches a document store, summarises the results, and outputs an answer—all orchestrated automatically.
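The chain idea described above can be sketched in plain Python. This is a framework-agnostic illustration and does not use LangChain's API: `DOCUMENTS`, `search_documents`, `summarise`, `format_answer`, and `make_chain` are all hypothetical names, and the "summariser" is a simple string operation standing in for an LLM call.

```python
import re
from typing import Any, Callable, List

# Toy in-memory document store; the contents are made up for illustration.
DOCUMENTS = [
    "Quarterly revenue grew 12 percent, driven by subscription renewals.",
    "Customer churn fell after the onboarding flow was redesigned.",
    "The data platform migration to the new warehouse finished in March.",
]


def tokens(text: str) -> set:
    """Lower-case word set used for naive keyword matching."""
    return set(re.findall(r"\w+", text.lower()))


def search_documents(query: str) -> List[str]:
    """Step 1: return documents that share at least one word with the query."""
    query_terms = tokens(query)
    return [doc for doc in DOCUMENTS if query_terms & tokens(doc)]


def summarise(docs: List[str]) -> str:
    """Step 2: stand-in for an LLM summary; keeps the first clause of each hit."""
    return " ".join(doc.split(",")[0].rstrip(".") + "." for doc in docs)


def format_answer(summary: str) -> str:
    """Step 3: wrap the summary in a final answer string."""
    return f"Answer: {summary}" if summary else "Answer: no matching documents."


def make_chain(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Compose steps so that each step receives the previous step's output."""
    def run(value: Any) -> Any:
        for step in steps:
            value = step(value)
        return value
    return run


qa_chain = make_chain(search_documents, summarise, format_answer)
print(qa_chain("revenue"))
```

In a real project, each step would typically be a framework component (a retriever, a prompt plus model call, an output parser) rather than a hand-written function, but the data flow is the same.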

LangChain also supports agents—LLMs that can dynamically decide which tool to use next based on intermediate outputs. This makes LangChain highly adaptable for data science scenarios where decisions depend on multiple inputs or conditional logic.
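A minimal sketch of the agent loop, again in plain Python rather than LangChain's own API. The decision function `choose_tool` is a hard-coded stand-in for the LLM (an assumption made so the example runs offline), and the tool names and the `run_agent` helper are hypothetical.

```python
from typing import Callable, Dict, List

# Tools the agent may call; each takes the data and returns one number.
TOOLS: Dict[str, Callable[[List[float]], float]] = {
    "mean": lambda values: sum(values) / len(values),
    "max": lambda values: max(values),
}


def choose_tool(question: str, observations: Dict[str, float]) -> str:
    """Stand-in for the LLM decision step.

    A real agent would prompt a model with the question and the observations
    gathered so far; here the choice is rule-based so the sketch is runnable.
    """
    text = question.lower()
    if "average" in text and "mean" not in observations:
        return "mean"
    if ("highest" in text or "peak" in text) and "max" not in observations:
        return "max"
    return "finish"


def run_agent(question: str, values: List[float], max_steps: int = 5) -> Dict[str, float]:
    """Loop: pick a tool, run it, record the result, stop on 'finish'."""
    observations: Dict[str, float] = {}
    for _ in range(max_steps):
        action = choose_tool(question, observations)
        if action == "finish":
            break
        observations[action] = TOOLS[action](values)
    return observations


result = run_agent(
    "What is the average and the highest daily sales figure?",
    [120.0, 80.0, 100.0],
)
print(result)
```

The `max_steps` cap is a design choice worth keeping in any agent: because the next action depends on intermediate outputs, a bound on iterations prevents a confused decision step from looping indefinitely.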

In addition to these features, LangChain offers tight integration with vector stores like Pinecone, Weaviate, and FAISS, making it ideal for projects involving semantic search, document classification, or question answering from knowledge bases.
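To make the semantic search idea concrete, here is a small sketch of what a vector store does at query time: rank stored embeddings by cosine similarity to a query embedding. The three-dimensional vectors and the `INDEX` contents are invented for illustration; real embeddings come from an embedding model and have hundreds or thousands of dimensions, and stores such as FAISS use approximate indexes rather than this brute-force scan.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical embeddings keyed by document title (illustrative values only).
INDEX: Dict[str, List[float]] = {
    "sales report": [0.9, 0.1, 0.0],
    "churn analysis": [0.1, 0.9, 0.1],
    "infrastructure notes": [0.0, 0.2, 0.9],
}


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vector: List[float], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k most similar documents as (title, score) pairs."""
    scored = [
        (title, cosine_similarity(query_vector, vector))
        for title, vector in INDEX.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


hits = top_k([0.8, 0.2, 0.0], k=2)
for title, score in hits:
    print(f"{title}: {score:.3f}")
```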

What Is Haystack?

Haystack, developed by deepset, is another powerful framework for building LLM-based applications, with a strong emphasis on search and question answering. Initially focused on classic NLP pipelines, Haystack has evolved to support LLMs and generative AI through its integration with tools like OpenAI, Cohere, and local Hugging Face models.

Its architecture is centred around pipelines, which allow developers to define a series of components—retrievers, readers, generators, and more—that process user queries in a structured manner. Haystack also supports hybrid search (combining keyword and vector search), document ranking, and reader models that extract answers from text passages.
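The pipeline pattern can be sketched without any framework. The toy `Pipeline` class below is not Haystack's class and its `add_step` method is a hypothetical name; the retriever, ranker, and reader are simple keyword-based stand-ins for the real components, and `PASSAGES` is made-up data.

```python
import re
from typing import Callable, Dict, List, Tuple

Component = Callable[[Dict], Dict]

PASSAGES = [
    "Haystack pipelines connect retrievers and readers. Each component has one job.",
    "Vector search compares embeddings. Keyword search compares terms.",
    "Readers extract answer spans from retrieved passages.",
]


def words(text: str) -> set:
    return set(re.findall(r"\w+", text.lower()))


def retriever(data: Dict) -> Dict:
    """Keep passages that share at least one word with the query."""
    query = words(data["query"])
    return {"documents": [p for p in PASSAGES if query & words(p)]}


def ranker(data: Dict) -> Dict:
    """Order retrieved passages by how many query words they contain."""
    query = words(data["query"])
    ranked = sorted(data["documents"], key=lambda p: len(query & words(p)), reverse=True)
    return {"documents": ranked}


def reader(data: Dict) -> Dict:
    """Pick the best-matching sentence from the top-ranked passage."""
    if not data["documents"]:
        return {"answer": None}
    query = words(data["query"])
    sentences = re.split(r"(?<=\.)\s+", data["documents"][0])
    return {"answer": max(sentences, key=lambda s: len(query & words(s)))}


class Pipeline:
    """Toy sequential pipeline: each component reads and extends a shared dict."""

    def __init__(self) -> None:
        self._steps: List[Tuple[str, Component]] = []

    def add_step(self, name: str, component: Component) -> "Pipeline":
        self._steps.append((name, component))
        return self

    def run(self, data: Dict) -> Dict:
        for _name, component in self._steps:
            data = {**data, **component(data)}
        return data


pipeline = (
    Pipeline()
    .add_step("retriever", retriever)
    .add_step("ranker", ranker)
    .add_step("reader", reader)
)
result = pipeline.run({"query": "keyword search"})
print(result["answer"])
```

Because every component has the same input and output shape, steps can be swapped (for example, replacing the extractive reader with a generator) without touching the rest of the pipeline.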

Haystack is particularly well-suited for production-ready applications. It offers features such as OpenSearch integration, REST APIs, and document stores that can scale efficiently. Many enterprise users prefer Haystack for building robust search interfaces or knowledge assistants that need to handle high query volumes with minimal latency.

For learners undertaking a Data Science Course in Bangalore, Haystack offers practical insight into how search systems work and how NLP can be used to solve real business problems.

Feature Comparison: LangChain vs. Haystack

Let us compare the two frameworks across several dimensions relevant to data science applications:

| Feature | LangChain | Haystack |
| --- | --- | --- |
| Primary Focus | Application building with LLMs | Search and question answering |
| Architecture | Chains and agents | Pipelines |
| Language Support | Python, JavaScript | Python |
| Vector DB Integration | FAISS, Pinecone, Weaviate, Chroma, etc. | FAISS, Milvus, Elasticsearch, OpenSearch |
| API Support | SDK-focused | REST API + SDK |
| Community Support | Fast-growing GitHub community | Backed by deepset with strong enterprise focus |
| LLM Support | OpenAI, Hugging Face, Cohere, Anthropic, Azure | OpenAI, Hugging Face, Cohere |
| Ideal For | Complex reasoning, dynamic workflows | Search, QA systems, chatbots |

LangChain shines in scenarios that demand flexibility and complex LLM behaviour—such as building agents or research assistants. Haystack, on the other hand, excels at delivering robust search applications where reliability, performance, and scale are critical.

Use Cases in Data Science

LangChain Use Cases:

- Building data-driven chatbots for querying internal datasets

- Automating multi-step workflows (e.g., summarisation + sentiment analysis)

- Developing custom research assistants for scientific literature

- Creating dynamic dashboards using LLM reasoning

Haystack Use Cases:

- Enterprise document search systems

- Legal or medical Q&A tools that extract information from large corpora

- Customer support bots that retrieve accurate answers from FAQs

- Hybrid search engines for unstructured and structured content
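For the hybrid search use case, one common way to combine a keyword ranking with a vector ranking is reciprocal rank fusion (RRF). The sketch below is a generic implementation of that technique, not necessarily what Haystack does internally; the document IDs and the two input rankings are invented, and `k = 60` is a conventional default rather than a value taken from this article.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists: each document scores sum(1 / (k + rank))."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results from a keyword retriever and a vector retriever.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(fused)
```

RRF only uses rank positions, so it avoids having to normalise keyword scores and vector similarity scores onto a common scale.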

Each of these tools fits naturally into modern data science pipelines. While LangChain’s flexibility enables creative experimentation, Haystack’s solid search infrastructure ensures consistent delivery in real-time applications.

Learning Curve and Community Support

LangChain tends to have a steeper learning curve, especially for users unfamiliar with agents or asynchronous programming. However, it is well documented and backed by a growing ecosystem of tutorials and GitHub repositories.

Haystack, by contrast, is more structured and easier to get started with, especially if you are working on a search or QA task. It has enterprise-grade documentation, official tutorials, and dedicated support from the deepset team.

If you are exploring advanced topics, working with both frameworks is a valuable way to deepen your understanding of LLM orchestration, pipeline design, and vector search.

Ecosystem and Integration

Both LangChain and Haystack integrate with popular tools used in data science, including:

- LangChain: Streamlit, Zapier, Airflow, LlamaIndex, and LangServe for deploying chains as APIs.

- Haystack: Streamlit, FastAPI, Docker, OpenSearch, and Haystack UI for rapid deployment.

LangChain’s flexibility makes it easier to stitch together different APIs and microservices, while Haystack offers a more cohesive set of tools out of the box.

With the boom in AI startups and enterprise adoption of LLMs, especially in tech hubs like Bangalore, there is a growing demand for professionals skilled in these frameworks. Many learners now consider a Data Science Course in Bangalore that covers such tools to be essential for staying competitive.

Conclusion: Which Framework Should You Choose?

Both LangChain and Haystack are powerful frameworks, but the right choice depends on your goals.

Choose LangChain if:

- You are building experimental or research-heavy projects.

- Your application requires dynamic tool selection or complex reasoning.

- You want fine-grained control over agent behaviour and decision-making.

Choose Haystack if:

- You are deploying scalable, production-ready search or QA systems.

- Your focus is on enterprise-grade performance and stability.

- You need a reliable tool with a robust API and pipeline structure.

Ultimately, the two frameworks are not mutually exclusive. Many organisations and developers use both, depending on the needs of each project. As LLM technologies continue to evolve, being comfortable with these tools will become a major advantage for anyone in the data science profession.

For aspiring professionals, mastering these frameworks through structured learning—such as Data Scientist Classes—can open doors to exciting roles in AI development, search engineering, and NLP-based analytics.

For more details visit us:

Name: ExcelR – Data Science, Generative AI, Artificial Intelligence Course in Bangalore

Address: Unit No. T-2, 4th Floor, Raja Ikon, Sy. No. 89/1, Munnekolala Village, Marathahalli – Sarjapur Outer Ring Rd, above Yes Bank, Marathahalli, Bengaluru, Karnataka 560037

Phone: 087929 28623

Email: enquiry@excelr.com